2. Testing Throughout the Software Life Cycle – ISTQB Foundation

2.1 Software Development Models

- Sequential: Waterfall, V-model

- Iterative-Incremental: Agile, Scrum

2.1.1 Explain the relationship between development, test activities and work products in the development life cycle, by giving examples using project and product types

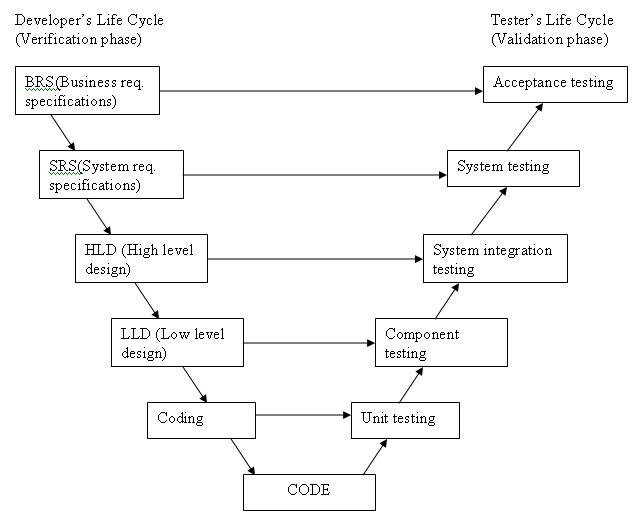

V-model

The V-model overcomes a problem with the Waterfall model, in which testing was done only toward the end of a project. Image from http://istqbexamcertification.com/wp-content/uploads/2012/01/V-model.jpg

Iterative and incremental models

Iterative and incremental models include RAD (Rapid Application Development) and Agile.

- RAD: Develop and test discrete functions one at a time

- Agile: collaboration of users with the development and testing teams

- RUP and Scrum

2.1.2 Recognize the fact that software development models must be adapted to the context of project and product characteristics

2.1.3 Recall characteristics of good testing that are applicable to any life cycle model

- For every development activity, there is a corresponding testing activity

- Each test level has test objectives specific to that level

- Analysis and design of tests for any test level should begin during the corresponding development activity

- Testers should be involved in reviewing documents as soon as drafts are available in the SDLC

2.2 Test Levels

- Component (unit)

- Component integration (program)

- System

- System integration

- Acceptance

Acceptance testing may include:

- user acceptance testing

- operational acceptance testing

- contract and regulation acceptance testing

- alpha and beta testing

- acceptance testing: Formal testing with respect to user needs, requirements, and business processes conducted to determine whether or not a system satisfies the acceptance criteria and to enable the user, customers or other authorized entity to determine whether or not to accept the system.

- operational acceptance testing: Operational testing in the acceptance test phase, typically performed in a (simulated) operational environment by operations and/or systems administration staff, focusing on operational aspects, e.g. recoverability, resource-behavior, installability and technical compliance. See also operational testing.

Verification: meets defined requirements

Validation: meets user needs and expectations, which may differ from the defined requirements. Validation testing is often done by customers or end users.

2.2.1 Compare the different levels of testing: major objectives, typical objects of testing, typical targets of testing (e.g., functional or structural) and related work products, people who test, types of defects and failures to be identified

Four levels of testing:

- Component (unit) testing: may use stubs and drivers

  - Stub: a simplified stand-in for a real code module that the component under test calls

  - Driver: code that calls the component under test in place of a caller that does not exist yet; also useful for integration testing

  - May use test-driven development (TDD), e.g. with JUnit

- Integration testing

  - Big-bang integration

  - Incremental integration

- System testing: testing the behavior of the whole, integrated system (integrating a system with other systems is system integration testing)

- Acceptance testing

  - Validation testing

  - UAT (user acceptance testing)

  - Operational acceptance test

  - Contract acceptance test

  - Compliance acceptance test

  - Alpha testing

  - Beta testing
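The stub and driver roles from the component testing item can be sketched in a few lines. This is only an illustration: `PriceCalculator`, `TaxServiceStub`, `run_driver` and the 20% rate are hypothetical names and values, not taken from the syllabus, and Python is used here as a stand-in for whatever language the component is written in.

```python
class TaxServiceStub:
    """Stub: stands in for a real tax module that the component calls."""

    def rate_for(self, country):
        # Canned answer (assumed value); a real module might query a database.
        return 0.20


class PriceCalculator:
    """Component under test; it depends on a tax service."""

    def __init__(self, tax_service):
        self.tax_service = tax_service

    def gross_price(self, net, country):
        rate = self.tax_service.rate_for(country)
        return round(net * (1 + rate), 2)


def run_driver():
    """Driver: stands in for the missing caller and exercises the component."""
    calculator = PriceCalculator(TaxServiceStub())
    return calculator.gross_price(100.0, "GB")
```

The stub sits below the component (it is called), while the driver sits above it (it does the calling). In practice an xUnit test method, such as one written with JUnit, often plays the driver role.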

2.3 Test Types

- Black box: functional and non-functional testing

- White box: structural testing

- Change-related: confirmation (re-testing), regression

2.3.1 Compare four software test types (functional, non-functional, structural and change-related) by example

Functional: testing particular functions against the SRS or user requirements document

Non-functional: aspects like performance, reliability

Black-box: based on specification

White-box: based on structure
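A small sketch may help separate the two views. The function `shipping_fee` and its rule (orders of 50 or more ship free, otherwise the fee is 4.99, negative totals are rejected) are invented for illustration. The black-box cases are derived from that stated rule alone, while the white-box checks are picked by reading the code so that each branch runs at least once.

```python
def shipping_fee(order_total):
    """Hypothetical rule: totals of 50 or more ship free, otherwise 4.99."""
    if order_total < 0:
        raise ValueError("order total must not be negative")
    if order_total >= 50:
        return 0.0
    return 4.99


# Black-box: inputs and expected results chosen from the specification only,
# here around the boundary at 50.
BLACK_BOX_CASES = [(0.0, 4.99), (49.99, 4.99), (50.0, 0.0), (50.01, 0.0)]


def run_black_box():
    return all(shipping_fee(total) == fee for total, fee in BLACK_BOX_CASES)


def run_white_box():
    """White-box: one input per branch of the code above."""
    try:
        shipping_fee(-1)
        rejected = False
    except ValueError:
        rejected = True  # first branch executed
    return rejected and shipping_fee(60) == 0.0 and shipping_fee(10) == 4.99
```

Both sets of checks exercise the same function; they differ only in where the test cases come from.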

Quality characteristics

- functionality, which consists of five sub-characteristics: suitability, accuracy, security, interoperability and compliance; this characteristic deals with functional testing as described in Section 2.3.1;

- reliability, which is defined further into the sub-characteristics maturity (robustness), fault-tolerance, recoverability and compliance;

- usability, which is divided into the sub-characteristics understandability, learnability, operability, attractiveness and compliance;

- efficiency, which is divided into time behavior (performance), resource utilization and compliance;

- maintainability, which consists of five sub-characteristics: analyzability, changeability, stability, testability and compliance;

- portability, which also consists of five sub-characteristics: adaptability, installability, co-existence, replaceability and compliance.

2.3.2 Recognize that functional and structural tests occur at any test level

- test basis: All documents from which the requirements of a component or system can be inferred. The documentation on which the test cases are based. If a document can be amended only by way of formal amendment procedure, then the test basis is called a frozen test basis.

All test types (functional, non-functional, structural, change-related) can occur at all test levels (unit, integration, system, acceptance), although how much of each is used depends on the context.

2.3.3 Identify and describe non-functional test types based on non-functional requirements

- Performance

- Load

- Stress

- Usability

- Maintainability

- Reliability

- Portability

2.3.4 Identify and describe test types based on the analysis of a software system’s structure or architecture

- Top-down development: use stubs to replace unfinished lower-level components

- Bottom-up development: use drivers to call lower-level components until their real callers are available

2.3.5 Describe the purpose of confirmation testing and regression testing

- Confirmation testing (re-testing) re-runs a test that originally failed, after the defect has supposedly been fixed, to confirm the fix

- Regression testing re-runs previously passing tests to help determine whether a change has introduced side effects elsewhere
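The difference between the two can be shown with a minimal sketch. It assumes a hypothetical function `average` whose original defect was a crash on an empty list; the function, the defect and the test cases are all invented for illustration.

```python
def average(values):
    """Fixed version; the assumed defect was a ZeroDivisionError for []."""
    if not values:
        return 0.0
    return sum(values) / len(values)


def confirmation_test():
    # Re-runs exactly the case that failed before the fix.
    return average([]) == 0.0


def regression_tests():
    # Cases that passed before the fix, re-run to look for side effects.
    return [
        average([2, 4]) == 3.0,
        average([5]) == 5.0,
        average([-1, 1]) == 0.0,
    ]
```

A passing confirmation test says the reported defect is gone. Only the regression tests say anything about whether the fix broke behavior that used to work.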

2.4 Maintenance Testing

- Testing prompted by a change to a deployed software system or its environment

- Triggered by: modification, migration, retirement

- Extensive regression testing required

2.4.1 Compare maintenance testing (testing an existing system) to testing a new application with respect to test types, triggers for testing and amount of testing

2.4.2 Recognize indicators for maintenance testing (modification, migration and retirement)

2.4.3 Describe the role of regression testing and impact analysis in maintenance
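One simple way to picture impact analysis is as a lookup from changed modules to the tests that exercise them, which then decides how much regression testing to run. The sketch below assumes such a mapping already exists; `TEST_COVERAGE`, the module names and the test names are hypothetical.

```python
# Hypothetical traceability data: which modules each test exercises.
TEST_COVERAGE = {
    "test_login": {"auth", "session"},
    "test_checkout": {"cart", "payment"},
    "test_invoice_pdf": {"payment", "reporting"},
    "test_search": {"catalog"},
}


def select_regression_tests(changed_modules, coverage=TEST_COVERAGE):
    """Return the tests that touch at least one changed module."""
    changed = set(changed_modules)
    return sorted(
        name for name, modules in coverage.items() if modules & changed
    )
```

For a modification to the `payment` module, `select_regression_tests(["payment"])` picks `test_checkout` and `test_invoice_pdf`. Real impact analysis also has to account for indirect dependencies, which this lookup ignores.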