Discover Quality Requirements with the Mini-QAW – Requirements Engineering Magazine

Good quality requirements help software engineers make the right architectural design decisions. Unfortunately, collecting requirements is not always easy. The Quality Attribute Workshop (QAW) helps teams gather quality requirements effectively, but it can be costly and cumbersome to organize. The mini-QAW is a short workshop (a few hours to a full day) designed for inexperienced facilitators, and it is a great fit for agile teams. Thanks to the shortened agenda and the visual tools we use in the workshop, we find that we can discover a fair number of quality requirements in half a day or less. If you want to get started right now, visit our mini-QAW webpage for materials to run a workshop (http://bit.ly/mini-qaw).

Quality requirements and software architecture

There could be millions of ways to design a software system from the same set of functional requirements. Features are binary: the software either does something correctly or it doesn’t. Software architects spend most of their time deciding how the software will provide that functionality. To make these decisions, architects consider quality requirements in addition to functionality. Quality requirements, sometimes also called quality attributes or non-functional requirements, characterize the externally visible properties of the system in the context of some bit of functionality. Learn the quality requirements for your system and you’ll stand a chance of designing an architecture that truly meets your stakeholders’ needs (Keeling 2017).

The Quality Attribute Workshop (QAW), introduced by the Software Engineering Institute (SEI) in 2003, describes a repeatable method for gathering quality requirements (Barbacci et al. 2003). During the two-day QAW, trained facilitators help a development team collaborate with stakeholders to gather detailed quality requirements as a precursor to software architecture design. The workshop produces a prioritized list of quality attribute scenarios, which support writing specific and measurable quality requirements.

The QAW is a great method but requires an investment few agile teams are willing to make. The QAW is also only as good as the facilitator who leads the workshop. Quality requirements are so essential to the success of a software system that forgoing structured methods for gathering them does not seem prudent. We created the mini-QAW to overcome deficiencies in the traditional QAW and make a workshop focused on quality requirements more palatable for agile teams.

Creating the Mini-QAW

The mini-QAW has two goals. First, reduce the time and effort needed to gather good quality requirements. Second, make it easier for novice facilitators to host a successful workshop. These goals are achieved by reducing the scope of the traditional workshop (see the QAW and mini-QAW agendas in the sidebar) and by introducing a quality attribute taxonomy to guide the workshop.

The mini-QAW agenda skips the business/mission and architectural plan presentations, as well as the identification of architectural drivers, from the traditional QAW. We assume participants know roughly what value the software should deliver. Similarly, in our experience, most teams discuss architectural drivers regularly, so presenting them during the workshop is unnecessary. When there are questions, a brief side discussion with interested stakeholders quickly clears up any confusion.

Sidebar: Comparing the workshop agendas

Mini-QAW Agenda

- Introduce the mini-QAW and quality attributes (10 minutes)

- Introduce quality attributes and the quality attribute taxonomy (15 minutes)

- Facilitate the stakeholder empathy map activity (15 minutes) – new in mini-QAW

- Facilitate scenario brainstorming (30 minutes to 2 hours)

  - “Walk the Quality Attribute Web” activity, or

  - Structured brainstorming

- Prioritize raw scenarios using dot voting (5 minutes)

- Refine scenarios (as time permits)

  - Continue while time remains; the remainder is homework

Schedule a follow-up meeting to review the refined quality attribute scenarios with stakeholders. This meeting usually lasts about an hour.

Traditional QAW Agenda

- Welcome and introductions (15 min)

- Quality Attribute Workshop overview (15 min)

- Business drivers (30 min) – skipped in mini-QAW

- System architecture (30 min) – skipped in mini-QAW

- Scenario generation and prioritization (2 hours) – shortened in mini-QAW

- Scenario refinement (2 hours) – shortened or skipped in mini-QAW

- Wrap up and review scenarios (1 hour) – shortened in mini-QAW

The traditional QAW is set up as a one- or two-day meeting with the facilitators, stakeholders, and the architecture team, and it is followed by a results presentation.

A significant amount of workshop time is saved by moving most of the scenario refinement out of the workshop. We still aim to refine some scenarios within the workshop, but in our experience detailed refinement is not a good use of stakeholders’ time, and the architect can do it after the workshop. Many stakeholders struggle to formulate details during the workshop. Turning this activity into a homework assignment means the architect can spend more time discovering facts and talking to stakeholders. A review one to two weeks after the workshop ensures stakeholders understand and agree with the scenarios. The review also enables new stakeholders to participate, which is important when key stakeholders could not attend the initial workshop.

Time pressure is one challenge in organizing a workshop. It is rare for all stakeholders to be available at the same time and place. The stakeholder empathy map exercise helps overcome the absentee stakeholder problem. In this activity, participants are shown how to think about requirements from the perspective of absent stakeholders, to ensure at least some of their concerns are considered.

Quality Attribute Web – The Mini-QAW Visualization

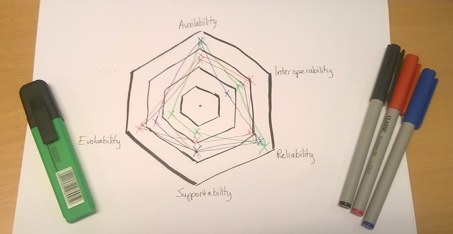

Beyond changes to the agenda, we also introduce the quality attribute web as a visual tool for the workshop. The quality attribute web is a radar chart created from a taxonomy of quality requirements likely to be important for the type of system under development. The axes of the web are used to score the importance of a quality attribute and as a visualization for capturing requirements during the workshop. Marks closest to the center indicate low priority or interest, and marks towards the outer border indicate a higher score. This way we can visualize differences in stakeholder priorities, or how different systems require different qualities. The visualization of the web also enables us to use standard workshop techniques such as dot-voting for prioritization.
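To make the scoring idea concrete, here is a minimal sketch of the web as a data structure: one axis per quality attribute from the taxonomy, and one mark per stakeholder per axis. The 0 to 5 scale, the class and method names, and the example axes are our assumptions for illustration; the method itself does not prescribe a numeric scale.

```python
from dataclasses import dataclass, field

# Assumed scale: 0 = centre of the web (low priority), 5 = outer border (high priority).
MIN_SCORE, MAX_SCORE = 0, 5


@dataclass
class QualityAttributeWeb:
    """A radar chart whose axes come from a quality attribute taxonomy."""
    axes: list[str]
    # marks[stakeholder][axis] -> score placed on that axis
    marks: dict[str, dict[str, int]] = field(default_factory=dict)

    def mark(self, stakeholder: str, axis: str, score: int) -> None:
        """Record one stakeholder's mark on one axis, rejecting unknown axes or scores."""
        if axis not in self.axes:
            raise ValueError(f"unknown axis: {axis}")
        if not MIN_SCORE <= score <= MAX_SCORE:
            raise ValueError(f"score must be between {MIN_SCORE} and {MAX_SCORE}")
        self.marks.setdefault(stakeholder, {})[axis] = score

    def profile(self, stakeholder: str) -> list[int]:
        """Scores in axis order: the polygon drawn for one stakeholder.
        Axes without a mark are treated as the centre of the web."""
        scores = self.marks.get(stakeholder, {})
        return [scores.get(axis, MIN_SCORE) for axis in self.axes]


# Hypothetical taxonomy and marks, for illustration only.
web = QualityAttributeWeb(["availability", "performance", "security", "modifiability"])
web.mark("operations", "availability", 5)
web.mark("operations", "security", 3)
print(web.profile("operations"))  # [5, 0, 3, 0]
```

Comparing the `profile` lists of two stakeholders is the numeric counterpart of seeing two differently shaped polygons on the wall.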

One risk of using a predetermined quality attribute taxonomy to drive the workshop is that it limits the discussion to the included quality attributes. In other words, the selection of attributes frames the assignment, which may in turn limit creativity. In our experience this risk is low, and the benefits of increased participant engagement make the taxonomy and web well worth it. Still, if this is a concern, facilitators can build the web fully or partially during the workshop, as illustrated by the dots on the upper-left axes in Figure 1.

Figure 1 – Sample empty quality attribute web

The Activities in the Mini-QAW

There are five primary steps in the mini-QAW. Each step is driven by a collaborative, visual activity designed to engage stakeholders and encourage discussion about a system’s quality requirements.

Stakeholder Empathy Map

The Stakeholder Empathy Map is a brainstorming and visualization exercise that gives absent and under-represented stakeholders a voice during the workshop. During the exercise, participants empathize with absent stakeholders and specify quality concerns from each absent stakeholder’s perspective. As a result, participants experience first-hand the conflicting priorities and requirements that may exist among the system’s stakeholders. This awareness makes it easier to deal with tension among participants over conflicting priorities and requirements later in the workshop.

To build a stakeholder empathy map, participants start by creating a list of stakeholders for the system. Next, each participant picks a stakeholder to empathize with and reflects on this stakeholder’s requirements. Finally, the participant records a best-effort estimate of the stakeholder’s quality priorities on the quality attribute web.

All of this happens before participants get the chance to share their own requirements. The intention is to avoid projecting the participants’ own wishes or needs onto the absent stakeholders they represent. One key benefit of the exercise is that it makes it possible to run a meaningful workshop with a smaller set of stakeholders. The activity also makes it feasible to run a workshop for the same system in multiple locations with subsets of stakeholders, removing travel overhead and potentially giving access to stakeholders who otherwise could not or would not participate.

Steps to create a stakeholder empathy map:

- Brainstorm: who are the stakeholders in the software?

- All participants pick a stakeholder who is not in the workshop.

- Estimate quality priorities for the chosen (absent) stakeholder.

- Record estimates on flip charts or provided worksheets.

- (optional) The facilitator guides the participants to reflect on the results and areas of conflict.
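For the optional reflection step, a facilitator looking at several empathy estimates side by side is essentially searching for axes where the estimates diverge. The sketch below assumes estimates recorded on a 0 to 5 scale and treats a spread of at least 3 points as a conflict; the stakeholders, scores, function name, and threshold are all hypothetical.

```python
# Hypothetical empathy-map estimates, on an assumed 0-5 scale:
# absent stakeholder -> quality attribute -> estimated priority.
estimates = {
    "end user":   {"usability": 5, "performance": 4, "security": 3},
    "operations": {"usability": 1, "performance": 3, "security": 4},
    "auditor":    {"usability": 2, "performance": 3, "security": 5},
}


def conflicts(estimates, threshold=3):
    """Return the axes where the highest and lowest estimates differ by at least
    `threshold` points. The threshold is our assumption, not part of the method."""
    axes = sorted({axis for scores in estimates.values() for axis in scores})
    found = {}
    for axis in axes:
        scores = {who: s[axis] for who, s in estimates.items() if axis in s}
        if max(scores.values()) - min(scores.values()) >= threshold:
            found[axis] = scores
    return found


for axis, scores in conflicts(estimates).items():
    print(axis, scores)  # usability {'end user': 5, 'operations': 1, 'auditor': 2}
```

In a real workshop the "conflict" judgment is the group's, not a number; a threshold like this only helps a novice facilitator decide where to start the conversation.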

Scenario Brainstorming

The scenario brainstorming exercise in the mini-QAW results in raw quality attribute scenarios, just like the corresponding exercise in the traditional QAW. The goal is to discover what the participants need and expect from the system. During scenario brainstorming, participants should not worry about creating a formal quality attribute scenario (see sidebar). Facilitators should invite participants to think about events (stimuli), behavior (responses), and changes to the environment in which the system operates.

Raw quality attribute scenarios informally describe stakeholder concerns. One way to formulate them is as concrete examples that demonstrate a quality: for example, we need the system to work during business hours (an availability requirement), or we expect a peak load of 150 requests per second (a performance requirement). Ideas for features and functional requirements usually come up during the workshop. Don’t suppress these; instead, put them in a dedicated area outside the quality attribute web for later review.

The base form of this exercise is to walk through each axis on the quality attribute web. Pause on each quality, consider whether it is relevant, and if it is, spend about five to seven minutes brainstorming and recording raw scenarios. In some groups, active listening questions are enough to keep scenario ideas flowing; others may benefit from structured help. Since the web is built from a quality attribute taxonomy, a taxonomy-based questionnaire can help participants adequately explore the problem space. Such questionnaires can also help novice facilitators consistently deliver good workshops. The SEI has example questionnaires available for the traditional QAW.

Steps for scenario brainstorming:

- Start with a quality on the web, ask “Is this quality attribute relevant to our system?”

- If Yes, spend 5 minutes brainstorming scenarios / concerns on that quality.

- Write raw scenarios on sticky notes and place on the quality attribute web.

- After 5 minutes, move to the next quality.
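The walk around the web is a simple timebox, and the wall it produces has two areas: notes on the axes and a separate spot for feature ideas. The sketch below models both under our own assumptions; `schedule`, `Board`, the axis names, and the "Export reports to PDF" note are hypothetical, and the 5-minute default mirrors the steps above.

```python
from collections import defaultdict


def schedule(axes, relevant, minutes_per_axis=5):
    """Timebox the walk around the web: each relevant axis gets a fixed slot,
    irrelevant axes are skipped. Returns (start minute, axis) pairs and the total."""
    slots, start = [], 0
    for axis in axes:
        if axis in relevant:
            slots.append((start, axis))
            start += minutes_per_axis
    return slots, start


class Board:
    """Sticky notes grouped by web axis, plus a parking lot for feature ideas."""

    def __init__(self, axes):
        self.axes = list(axes)
        self.notes = defaultdict(list)  # axis -> raw scenarios
        self.parking_lot = []           # functional ideas, reviewed later

    def add(self, text, axis=None):
        """Place a raw scenario on an axis; a note without an axis goes to the parking lot."""
        if axis is None:
            self.parking_lot.append(text)
        elif axis in self.axes:
            self.notes[axis].append(text)
        else:
            raise ValueError(f"unknown axis: {axis}")


axes = ["availability", "performance", "security", "usability"]
slots, total = schedule(axes, relevant={"availability", "performance", "security"})
print(slots, total)  # [(0, 'availability'), (5, 'performance'), (10, 'security')] 15

board = Board(axes)
board.add("System works during business hours", "availability")
board.add("Peak load of 150 requests per second", "performance")
board.add("Export reports to PDF")  # a feature idea, not a quality concern
```

Keeping the parking lot separate from the axes follows the advice to record feature ideas without letting them crowd the web.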

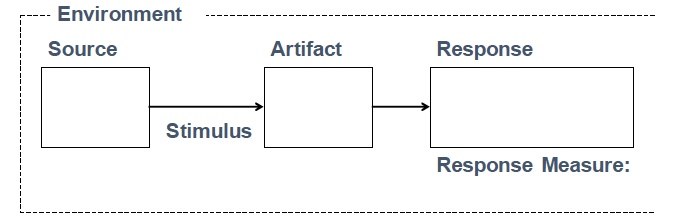

Sidebar: Quality Attribute Scenarios

We document our quality requirements with quality attribute scenarios. Each scenario is specific to a quality attribute and consists of six parts (Bass et al. 2003):

- Source of stimulus: anything that generates a stimulus to the software system. For example, a user, another application, embedded software in a network device or hardware.

- Stimulus: the actual event the software system is subjected to, or the condition that arises with the stimulus.

- Environment: the situation in which the stimulus occurs, described as conditions. For example, the software system could be running a batch job, experiencing a network partition, or under peak load, or everything could be running normally.

- Artifact: defines what part of the system receives the stimulus. Of course, this could be the whole system, but it could also be a specific component.

- Response: the reaction of the system to the stimulus, what happens after the stimulus arrives.

- Response measure: how can we measure the response during testing and decide whether it is acceptable?
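The six parts map naturally onto a record type, which also makes it easy to see which parts a raw scenario is still missing. The sketch below is our own illustration: the class name, the extra `quality_attribute` tag, and the source, artifact, and response values are hypothetical, and the response measure is deliberately left empty rather than invented.

```python
from dataclasses import dataclass, fields


@dataclass
class QualityAttributeScenario:
    """The six parts of a quality attribute scenario, tagged with its quality attribute."""
    quality_attribute: str
    source: str             # source of stimulus
    stimulus: str
    environment: str
    artifact: str
    response: str
    response_measure: str

    def missing_parts(self) -> list[str]:
        """Names of parts that are still empty, e.g. while refining a raw scenario."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]


# The raw scenario "we expect a peak load of 150 requests per second", partly refined.
# Source, artifact, and response below are illustrative guesses, not workshop output.
peak_load = QualityAttributeScenario(
    quality_attribute="performance",
    source="end users",
    stimulus="150 requests per second",
    environment="peak load",
    artifact="whole system",
    response="requests are processed",
    response_measure="",  # still to be agreed with stakeholders
)
print(peak_load.missing_parts())  # ['response_measure']
```

An empty response measure is exactly the gap that makes a scenario untestable, which is why refinement focuses on filling it in.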

Raw Scenario Prioritization

Prioritizing raw scenarios helps the software architect focus on what is important to the stakeholders so the architect can make the right trade-offs when designing the architecture. There is also no use in refining scenarios that stakeholders do not find useful or important. Finally, prioritization may expose areas of disagreement requiring further exploration during the workshop.

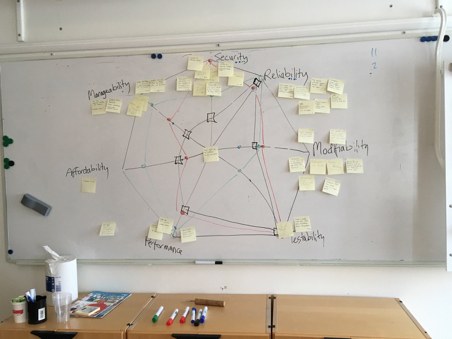

The mini-QAW uses visual dot voting to establish priorities. Both the quality attributes (web axes) and raw quality scenarios are prioritized. Based on the number of scenarios, participants are assigned a set number of dots. Participants may distribute their dot allocation as they wish: all dots on one scenario; one dot on each scenario that is important to them; or anything in between. Voting for quality attributes works in a similar way.

After voting, the quality attribute web contains a lot of visual information. Facilitators can use this information to identify inconsistencies and gaps in the requirements analysis. Figure 2 shows an example from one workshop. In this example, the results of the empathy exercise are shown as the colored lines running through the web. We can also see that manageability, security, reliability, and modifiability received the most scenarios, which aligns with what most stakeholders cared about. One stakeholder strongly valued affordability, but few raw scenarios were identified for it; this may be an opportunity for follow-up and further analysis. Additionally, the facilitator should look for cluttered areas that might benefit from a quick consolidation round.
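The two visual cues described above, a highly valued attribute with few scenarios and an overcrowded axis, can be expressed as simple checks. This is a sketch under stated assumptions: the thresholds, the function name, and all the numbers are hypothetical and only loosely shaped like the Figure 2 description; they are not data from that workshop.

```python
def review_web(priority, scenario_counts, high=4, few=2, cluttered=10):
    """Read the web after voting, using hypothetical thresholds:
    - gaps: axes rated at least `high` by some stakeholder but with fewer than `few` scenarios
    - clutter: axes with at least `cluttered` notes, candidates for consolidation"""
    gaps = [axis for axis, p in priority.items()
            if p >= high and scenario_counts.get(axis, 0) < few]
    clutter = [axis for axis, n in scenario_counts.items() if n >= cluttered]
    return gaps, clutter


# Hypothetical numbers, not real workshop data.
# priority: highest score any stakeholder gave the axis (0-5); counts: raw scenarios per axis.
priority = {"manageability": 4, "security": 5, "reliability": 4,
            "modifiability": 3, "affordability": 5}
counts = {"manageability": 9, "security": 12, "reliability": 7,
          "modifiability": 8, "affordability": 1}
print(review_web(priority, counts))  # (['affordability'], ['security'])
```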

Steps for dot voting:

- Each stakeholder gets:

  - n / 3 + 1 dots for raw quality scenarios, where n = # scenarios

  - 2 votes to choose “top quality attribute”

- Participants place dots, taking turns or at the same time.
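The dot budget and the tally are simple arithmetic. The sketch below assumes n / 3 is rounded down, which the steps do not specify, and represents each participant's dots as a list with one entry per dot so that stacking several dots on one scenario is allowed. Participant names and scenario IDs are hypothetical.

```python
from collections import Counter


def dots_per_participant(n_scenarios: int) -> int:
    """n / 3 + 1 dots; rounding n / 3 down is our assumption."""
    return n_scenarios // 3 + 1


def tally(ballots: dict[str, list[str]], budget: int) -> list[tuple[str, int]]:
    """Count dots per item, highest first. Each ballot lists one entry per dot, so a
    participant may stack several dots on one item or spread them out."""
    votes = Counter()
    for participant, dots in ballots.items():
        if len(dots) > budget:
            raise ValueError(f"{participant} placed {len(dots)} dots; budget is {budget}")
        votes.update(dots)
    return votes.most_common()


# Hypothetical workshop: 12 raw scenarios, so each participant gets 12 // 3 + 1 = 5 dots.
budget = dots_per_participant(12)
scenario_ballots = {
    "ana": ["S1"] * 5,                      # all dots on one scenario
    "ben": ["S1", "S2", "S3", "S4", "S5"],  # one dot each
    "eve": ["S2", "S2", "S7"],              # anything in between
}
print(tally(scenario_ballots, budget)[:2])  # [('S1', 6), ('S2', 3)]

# The same tally works for the 2 "top quality attribute" votes.
attribute_ballots = {"ana": ["security", "availability"], "ben": ["security"]}
print(tally(attribute_ballots, 2))  # [('security', 2), ('availability', 1)]
```

Giving roughly one dot per three scenarios keeps the budget scarce enough that participants must choose, while the "+ 1" guarantees at least one dot even for very short lists.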

Figure 2 – Visual cues from the quality attribute web

Scenario Refinement

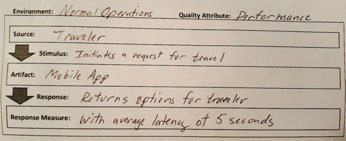

The scenario refinement step is essential for getting from raw scenarios to testable requirements. The traditional QAW rightly devotes significant agenda time to this activity. Since the mini-QAW is a time-constrained workshop, we continue this activity only until time runs out. This usually leaves several high- or medium-priority raw scenarios untouched. The architect, facilitator, or requirements engineer refines the remaining scenarios after the workshop as homework.

Figure 3 – quality attribute scenario worksheet example

In a typical mini-QAW, the facilitator guides the team in refining scenarios. Start with the top-voted scenarios (those with the most dots) and use a visual worksheet (Figure 3) to create specific, measurable scenarios. Note any assumptions made regarding response measures, scenario context, and anything else that may help in understanding and testing the scenario later. The facilitator or architect should ask questions to clarify requirements. As in the brainstorming activity, functional requirements disguised as quality attributes usually show up here too: be aware of them, add them to a list, and review them later.

Steps for scenario refinement:

- Start with the highest-priority scenario.

- Fill out the visual worksheet, identifying/specifying each of the components of a quality attribute scenario.

- Complete or put a time limit on each scenario; continue until workshop time runs out.
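The split between in-workshop refinement and homework follows directly from the priority order and the remaining time. The sketch below assumes a fixed 15-minute timebox per scenario; that number, the function name, and the scenario IDs are hypothetical.

```python
def plan_refinement(ranked, minutes_left, minutes_per_scenario=15):
    """Split scenarios, already sorted by dots (highest first), into those refined in
    the workshop and the homework list. The 15-minute timebox is our assumption."""
    in_workshop = []
    for scenario in ranked:
        if minutes_left < minutes_per_scenario:
            break  # time is up: everything left becomes homework
        in_workshop.append(scenario)
        minutes_left -= minutes_per_scenario
    return in_workshop, ranked[len(in_workshop):]


ranked = ["S1", "S2", "S3", "S4", "S5"]
print(plan_refinement(ranked, minutes_left=40))  # (['S1', 'S2'], ['S3', 'S4', 'S5'])
```

Because the list is processed in priority order, whatever is left over is always the lower-priority tail, which matches the advice to start with the highest-priority scenario.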

Scenario Review

The workshop facilitator or architect formalizes the scenarios after the workshop. During this homework, the scenarios may change slightly and no longer reflect exactly what the stakeholders had in mind. Therefore, it is important to review the scenarios with the stakeholders.

After the final tweaks, present the refined scenarios to your stakeholders during a review to gather their feedback. We recommend giving stakeholders the opportunity to read the material before the review meeting. During the review, you may discover previously unstated requirements; record these. You may also find that a scenario is easily misunderstood; in this case, try to explain how the scenario helps the stakeholder do a job or achieve a goal. Refinement may change a scenario’s scope: one scenario may become several, or several may merge into one. It is therefore useful to verify the priority of such scenarios again.

Steps for scenario review:

- Present top and architecturally significant scenarios to stakeholders.

- Gather feedback & check that scope matches expectations.

- Trawl for new requirements (!).

Experience so Far

The mini-QAW method has been used successfully by several groups around the world, and it is finding its place as a standard tool for many software architects. Despite the shortened format, we find that we can easily discover 30 quality requirements through 50+ rough scenarios in less than half a day. The mini-QAW will not give results of the same depth and breadth as a traditional QAW, but it is a great tool for smaller, low-risk, or iterative projects.

Hints and Tips for Facilitators

We want more software projects to deliver long-term value, which requires a sound architecture. Without a proper understanding of quality requirements, it is very difficult to build such a foundation. Therefore, we want you to be successful with the mini-QAW and wrap up with some hands-on hints and tips.

- Prepare, prepare, prepare. You’ll feel more confident and get more out of the workshop. Know what you will say, and practice the logistics of the workshop.

- Match your tools to the stakeholders. Some people dislike sticky notes – be creative and find an alternative. For remote workshops using video conferencing, you will want to use online collaborative tools. Skype (for Business) works – for example, you can collaboratively annotate PowerPoint slides.

- Choose the right taxonomy for your system: your experience is needed to pick quality attributes that are relevant for the quality attribute web. Don’t use too many – about 5 or 6 properties is a good number. Remember you can brainstorm just-in-time if needed or leave some axes on the web open.

- Give each stakeholder a voice: ensure everyone is heard. This may mean you need to split your workshops so that everyone feels comfortable speaking. For example, in a strongly hierarchical culture, subordinates may not want to speak up with their superiors in the room.

- Speed up your ATAM (Architecture Tradeoff Analysis Method) evaluation by using the mini-QAW when there is no proper record of quality requirements.

Conclusion

The mini-QAW is an additional tool in one’s toolbox, but of course it is no silver bullet for quality requirements engineering. We have neither conducted a formal evaluation of its effectiveness nor compared it directly with the traditional QAW. Yet it has been useful to us, and increasingly to others, as a quick way to document key quality requirements.

We shortened the traditional QAW workshop by tailoring some original activities and deferring part of the work as homework. The core mini-QAW activities include scenario brainstorming using a “quality attribute web”, creating stakeholder empathy maps, visual voting, and refinement using a visual quality attribute scenario template. Refreshed activities and a tuned agenda create fast, effective, and fun workshops.

If you want to get started, you will find resources to run the workshop freely available on our webpage: http://bit.ly/mini-qaw. There you will also find further material (slides, handouts, videos, etc.) relating to our talks. The initial mini-QAW method won the best presentation award at SATURN 2014 [1]. Thijmen presented the stakeholder empathy exercise and advice for remote facilitation at SATURN 2015 [2]. We ran two tutorials with the combined material: one at SATURN 2016 [3] and one at ICSA 2017 in Gothenburg. The ICSA tutorial was the first time we presented in Europe (de Gooijer 2017).

The method is still young and we very much appreciate hearing about your feedback and experiences. Quality attribute taxonomies and scenario discovery questionnaires help us all, so let’s collect them together!

References

Author’s profile

Thijmen works as an IT architect at Kommuninvest, acting as technical lead for its digitalization initiative and its growing CI/CD and Agile practice. His work on the mini-QAW started in his role as a researcher at ABB, where he worked on software architectures with colleagues around the globe. He has authored publications at leading international software conferences, receiving two best paper awards. Thijmen graduated cum laude in software engineering from VU University in the Netherlands and Mälardalen University in Sweden.

Michael Keeling is a software engineer at LendingHome and the author of Design It! From Programmer to Software Architect. In his career so far, he has worked on a variety of interesting software systems including IBM Watson, enterprise search applications, financial platforms, and Navy combat systems. Michael has a master’s degree in software engineering from Carnegie Mellon University and a bachelor’s degree in computer science from the College of William and Mary. Contact him via Twitter @michaelkeeling or his website, https://www.neverletdown.net

Will Chaparro is a software development manager in IBM’s Watson Group. He spent over 5 years designing and building complex enterprise search solutions as a managing consultant. Prior to IBM he spent 11 years as a software engineer. He has a BS in Computer Science from the University of Pittsburgh.
