How to Write a Software Engineering Performance Review | Clockwise

Many people believe that continuous feedback can substitute for a software engineering performance review. Would that it were true! It’s absolutely the case that just-in-time feedback encourages immediate growth and reduces surprises during reviews. But it’s no substitute for regular 360-degree reviews.

Just-in-time feedback is for one-off occurrences. Performance reviews are about telling a story of what happened over the last period and what needs to happen in the coming period in order for your report to stay employed or get promoted.

“The fact is that giving such reviews is the single most important form of task-relevant feedback we as supervisors can provide,” Andy Grove wrote in High Output Management. He further notes that these reviews should happen regardless of your organization’s size or structure.

In The Manager’s Path, Camille Fournier explains that 360-degree reviews help you understand:

- Other people’s evaluations of your report

- Others’ view of their strengths, weaknesses, and accomplishments

- The patterns and trends in your report’s performance


Despite how crucial performance reviews are, more than half of executives surveyed by Deloitte said they didn’t believe their performance management approach increased engagement or improved performance.

Most of us have had frustrating performance reviews. “Giving performance reviews is a very complicated and difficult business and we, managers, don’t do an especially good job at it,” Grove wrote. Engineering Lead Gergely Orosz describes what a bad software engineering performance review can look like:

- Vague and disconnected. All filler, no killer. You get a pat on the head, and maybe bonus and pay numbers, but no specifics or areas for improvement. No mention of your most impactful work. Just side quests. You leave feeling like your manager doesn’t know what you do. And won’t help you get promoted. You leave feeling like they spent five minutes on it and don’t really care about your career.

- Biased and unfair. You walk out with your blood boiling. Every example of where you need to improve is one-sided and ignores key details. You suspect your gender, ethnicity, etc. may be influencing your manager’s perception. Or office politics might be playing an outsize role. When you try to provide context or challenge, your manager gets defensive.

- New and shocking. Everything negative you hear is totally new to you. Instead of bringing things up in your one-on-one, apparently they’ve been saving it all for this conversation. You walk out afraid that you have no idea where you stand or how you’re being perceived.

A poorly handled performance review will erode trust between an Engineer and their Manager more quickly than almost anything else, sending the Engineer to a recruiter faster than you can type “needs improvement.”

Why, with so much at stake, do reviews go so poorly so often? Because great performance reviews are hard to write and deliver! They take time to prepare. We all tend to focus on the most recent rather than the most important data points. And we all suffer from various blind spots, biases, and peccadillos. To help overcome these challenges, I’ve written a fairly comprehensive how-to guide for crafting performance reviews that are fair, help you build trust with your team, and motivate them to work hard to move the needle for your organization.

Here are the five steps to preparing a great Software Engineering performance review.


1. Set your criteria

In Coding Sans’ State of Software Development survey, 27% of tech leaders reported that they don’t use any metrics to measure performance. Yikes. “The biggest problem with most reviews is that we don’t usually define what it is we want from our subordinates, and if we don’t know what we want, we are surely not going to get it,” Grove wrote.

Of those that do, these are the most popular:

But there are many more criteria to take into account. I’ve categorized suggestions from Software Developer and Blogger Michael Shpilt and CEO and Machine Learning PhD David Kofoed Wind into three categories: core job responsibilities, communication, and extra mile.

Core job responsibilities

These are signals the software developer is committed to delivering value at their job.

- Do they deliver features and changes in production that meet stakeholder expectations?

- Do they finish tasks and code quickly?

- Does their code have few bugs, good style, and thorough documentation?

- Do they make it easy to figure out where they are on their tasks?

- Do they do a lot of code reviews? Do their peers enjoy their reviews?

- Do they demonstrate user empathy?

- Do they follow through on the things they said they’d do?

- Do they put in the extra hours when needed for finishing a feature or handling a crashed server?

- Do they proactively solve relevant problems without being asked? Even when they’re caused by someone else?

- Do they suggest and/or implement reasonable technical additions or upgrades to the current tech stack?

- Do they show initiative by proactively offering to build new features or integrate new tech?

- Do they offer to do things like code refactoring or start a unit test project/system test?

- Do they take it upon themselves to learn new technologies, techniques, and topics?

Communication

These questions get at how effectively the software developer is communicating with stakeholders.

- Do they proactively alert stakeholders and suggest workarounds when they run into problems or expect to miss a deadline, or do they go home without telling anyone?

- Do they make sure they’re understood when they don’t get a response from a stakeholder, or do they assume everything is fine?

- Do they follow up and ensure other people know when they’re the bottleneck?

Extra mile

These signals get at the developer’s commitment to the organization as a whole.

- Do they volunteer for tasks outside their job, like organizing a team offsite, giving a lecture to the team on something they learned, or starting a diversity initiative?

- Do they get involved in the recruiting process, contributing meaningfully to outreach, referrals, screening calls, interviews, and code tests?

- Do they take an active interest in onboarding new hires?

Once you decide what your metrics are, start tracking them now, not when review time is coming up. Consistently taking notes helps you find patterns and offer concrete examples. “You’re curt” is ad-hominem and subjective. A specific example can make the feedback more meaningful and harder to argue with. If you’ve been taking notes, you can say something like “It seemed like your response to Mary at the third GTM meeting where you said you hated her design was curt. This is a problem because we need our developers and designers to have a good working relationship.” Good notes also help you understand their progress and tell a story about what your report is accomplishing as a part of the organization.
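If you keep your notes in a structured form, patterns are easier to spot at review time. Here is a minimal sketch of one way to do that, assuming a simple in-memory log. The `Note` fields, the criterion labels, the dates, and every entry other than the Mary example are hypothetical and only for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Note:
    """One dated observation about a report (hypothetical structure)."""
    when: date
    criterion: str    # one of your chosen criteria, e.g. "communication"
    observation: str  # what happened, stated as specifically as possible
    impact: str       # why it matters to the team or organization


def group_by_criterion(notes):
    """Return {criterion: [notes, oldest first]} so trends are easy to read."""
    grouped = defaultdict(list)
    for note in sorted(notes, key=lambda n: n.when):
        grouped[note.criterion].append(note)
    return dict(grouped)


# Illustrative entries only; the dates are made up.
notes = [
    Note(date(2024, 3, 12), "communication",
         "Told Mary at the third GTM meeting that you hated her design.",
         "Developers and designers need a good working relationship."),
    Note(date(2024, 1, 22), "communication",
         "Flagged a slipping deadline early and suggested a workaround.",
         "Stakeholders could replan before the deadline passed."),
    Note(date(2024, 2, 5), "extra mile",
         "Gave the team a lecture on something they had learned.",
         "Spread knowledge beyond their own tasks."),
]

for criterion, entries in group_by_criterion(notes).items():
    print(criterion)
    for entry in entries:
        print(f"  {entry.when.isoformat()}: {entry.observation}")
```

Recording the impact next to each observation is a deliberate choice: it keeps the note close to the "what happened and why it matters" shape of the feedback example above. A spreadsheet or a running document would serve just as well.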

With your criteria established, you now need to decide on a performance rating scale.

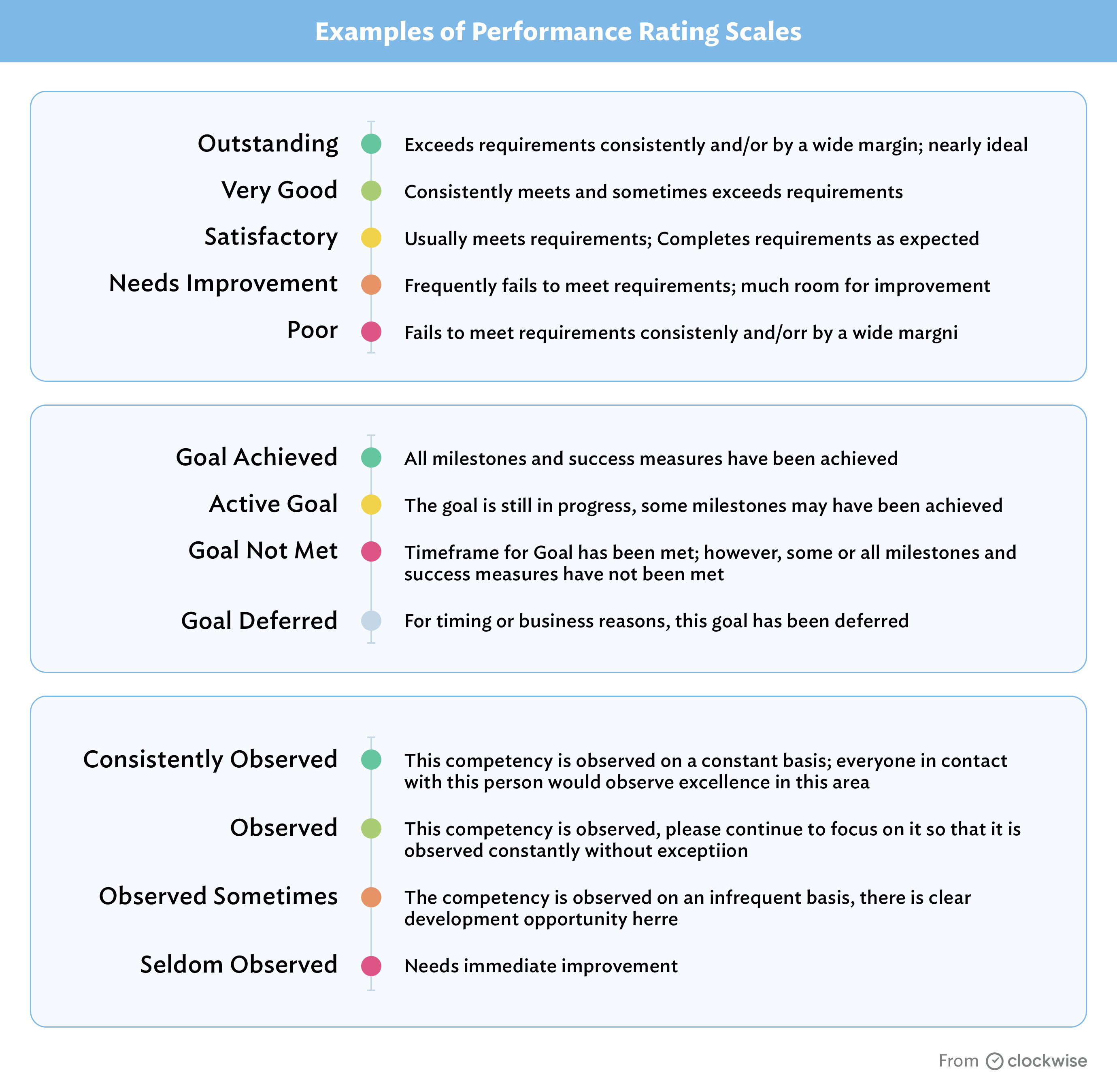

There are lots of options for this. Here are a few:

Of course your reports want to know how they’re doing in order to improve. But they also want to get promoted and paid more. Great performance reviews should be part of clearly defined levels and expectations for promotion and pay. Orosz points to tech companies who have made their career progression frameworks public, including:

One last thing on criteria. Be sure to communicate the criteria to your reports well ahead of time so they know how they’ll be evaluated and can ramp up performance appropriately. Remember, the entire point of the exercise is to improve and reward performance. When your developers know what great performance looks like, they’re better able to perform well.

2. Decide on a cadence

Having nailed down your criteria, next you need to decide on a cadence. Annual, biannual, and quarterly are common cadences.

Coding Sans recommends three kinds of reviews, all with their own cadence:

- Annual compensation decision

- Quarterly or per-project performance snapshot

- Weekly check-in

One note on cadence is that it’s likely a good idea to separate performance reviews from compensation meetings. Orosz notes that people tend to tune out as soon as they hear their compensation numbers, making the rest of the meeting less impactful.

3. Decide who to include

Now that you know what you’re looking to measure and how often you’re measuring, you need to know who to talk to about your direct report. Are you letting People Ops or HR direct the process, or is the Manager running the show?

In The Manager’s Path, Camille Fournier describes a traditional 360-degree review as integrating feedback from team members, direct reports, peers, and a self-review. You might also want to include stakeholders such as product managers, project managers, your manager, QA, and maybe others as well.

Regardless of whether it’s an official part of the review, it’s not a bad idea to grab coffee with the people your report works closely with. What are their opinions about your Engineer’s strengths and areas for growth?

4. Write an outline

By now you should have set your criteria, gathered feedback from everyone you’re going to include, looked at pull requests and JIRA tickets, and given your report their marks. Now it’s time to start on the key takeaways.

Set aside more time than you think you’ll need to write your review. Start by reading all the collected feedback and summarizing it. Then narrow down the feedback to the most important points that you absolutely need your Engineer to understand.

One way to organize your outline is to split it into a Looking Back section and a Looking Ahead section.

Looking back

Add up to three especially important or impactful accomplishments into Looking Back. While numeric ratings are helpful and lend an air of objectivity to the proceedings, it’s just as important to acknowledge achievements. “Those strengths are what you’ll use to determine when people should be promoted, and it is important to write them down and reflect on them,” Fournier writes.

Orosz’s biggest disappointment with performance reviews has never been where numbers are concerned – even if they were below what he expected. Rather, he was most disappointed when his manager failed to acknowledge his work or reflect on his achievements and growth. “While managers don’t always have control of the numbers, they always have full control of how they say – or don’t say – ‘Thank you,’” Orosz writes.

When delivering this part, “Don’t let people skip over the good stuff in order to obsess over areas for improvement, as many will want to do,” Fournier writes. Consider asking whether you left anything out. And if you did, be sure to add it. Nothing is more demotivating than having your hard work ignored. Acknowledging achievements in detail also signals that you’ve been taking notes and spent time preparing for the review. If you gather peer feedback, consider including some quotes.

Looking ahead

Looking Ahead is for two or three areas for improvement. If you can think of specific things they can do to improve, include them. Examples include reading a book, attending training, or volunteering with an organization.

Another must-have is how your developer is performing for their level, and what needs to happen for them to be considered for promotion. If you’re struggling to think of areas for improvement, that’s a good signal your Engineer is ready for more challenge, and a promotion.

Once you get your outline written, consider running it by your manager. This can be a good gut-check against bias. Plus, they may remember information you missed. And it helps give them visibility into your developers.

5. Follow the one-sleep rule

Engineering Manager Dan Ubilla recommends the “one-sleep rule.” Step away from the review for a while so you can come back to it with fresh eyes. The next day, re-read your notes to be sure you didn’t leave out anything important, then read the review again to be sure there are no confusing, duplicative, or conflicting parts. If you have multiple reports, try comparing their reviews side-by-side to ensure you’re evaluating everyone on the same scale and using similar language.
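If your ratings live in a structured form, part of the side-by-side comparison can be automated. The sketch below assumes each review is a plain mapping from criterion to rating. The `SCALE` labels, the report names, and the sample ratings are hypothetical, so substitute whatever scale your organization uses. It only catches mechanical gaps such as a missing rating or an off-scale label, and it does not replace reading the reviews next to each other.

```python
# Hypothetical three-point scale; replace with your organization's own.
SCALE = ("needs improvement", "meets expectations", "exceeds expectations")


def find_inconsistencies(reviews, scale=SCALE):
    """Return readable problems: missing criteria or off-scale ratings."""
    # Every criterion that appears in at least one review.
    all_criteria = set()
    for ratings in reviews.values():
        all_criteria.update(ratings)

    problems = []
    for name in sorted(reviews):
        ratings = reviews[name]
        for criterion in sorted(all_criteria):
            if criterion not in ratings:
                problems.append(f"{name}: no rating for '{criterion}'")
            elif ratings[criterion] not in scale:
                problems.append(
                    f"{name}: '{ratings[criterion]}' is not on the shared scale"
                )
    return problems


# Illustrative data only.
reviews = {
    "report_a": {
        "communication": "meets expectations",
        "core job responsibilities": "exceeds expectations",
    },
    "report_b": {
        "communication": "solid",  # free-form label, not on the scale
    },
}

for problem in find_inconsistencies(reviews):
    print(problem)
```

Checking every review against the union of all criteria is what flags the case where one report was rated on something another was not.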

Go forth and evaluate

It’s true that most Engineering Managers suck at giving performance reviews. In fact, most Engineering Managers also suck at running an effective one-on-one meeting.

But you are not most Engineering Managers! You read 2,000 words on how to write a great performance review, putting you way ahead of the pack already.

To further distinguish yourself, decide ahead of time which criteria you’ll evaluate your Engineers on, share those criteria with them, and let them know when they’ll be evaluated and who you’ll be gathering feedback from. Once you have your feedback, write an outline distilling the most important areas of excellence and opportunities for improvement. Then sleep on the whole thing before presenting.

If you do this, you’ll have all the ingredients necessary for a performance evaluation that’s fair, clear, and actionable.